The Interrater Reliability Score Report shows the interrater reliability for all questions at the selected screening level. The report shows two separate interrater reliability scores: 1) the Fleiss’ Kappa Score, which measures agreement among three or more raters; and 2) the Cohen’s Kappa Score, which measures agreement between two raters who are rating the same items, corrected for how often the raters would be expected to agree by chance. The contents of the report reflect the current status of the review questions, so numbers and scores will change as conflicting answers are resolved or screening progresses.

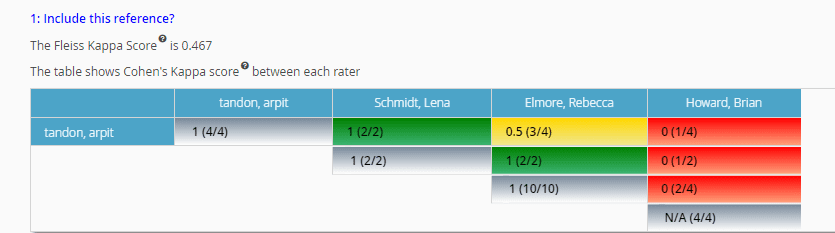

In the example shown above, the Fleiss’ Kappa score (displayed over the table) is 0.467, which indicates only moderate agreement across screeners on the project for Question 1 (“Include this reference?”). The Fleiss’ Kappa Score is a statistical measure for assessing the reliability of agreement among three or more raters.
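To make the Fleiss’ Kappa Score more concrete, the following minimal sketch computes it from a small table of answer counts. The function name `fleiss_kappa`, the input layout, and the sample counts are all hypothetical and are not taken from the report. The sketch also assumes that every reference was screened by the same number of raters, which the textbook formula requires; how the report handles references with differing numbers of screeners is not covered here.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for a table where counts[i][j] is the number of
    raters who gave reference i the answer j (hypothetical layout)."""
    if not counts:
        raise ValueError("need at least one rated reference")
    n_items = len(counts)
    n_raters = sum(counts[0])
    # Assumption: each reference has the same number of ratings (at least 2).
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("every reference needs the same number (>= 2) of ratings")

    # Observed agreement: share of agreeing rater pairs, averaged over references.
    p_items = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(p_items) / n_items

    # Chance agreement: sum of squared overall answer proportions.
    n_categories = len(counts[0])
    totals = [sum(row[j] for row in counts) for j in range(n_categories)]
    p_e = sum((t / (n_items * n_raters)) ** 2 for t in totals)

    if p_e == 1.0:
        return float("nan")  # every rating is the same answer; kappa is undefined
    return (p_bar - p_e) / (1 - p_e)


# Hypothetical data: 4 references, 3 raters, columns are [include, exclude].
example_counts = [[3, 0], [2, 1], [0, 3], [1, 2]]
example_kappa = fleiss_kappa(example_counts)
print(round(example_kappa, 3))  # prints 0.333
```

A value of 1 means the raters always agree, while a value near 0 means they agree about as often as chance alone would predict.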

The table shows the Cohen’s Kappa score between each pair of raters for Question 1 (“Include this reference?”). The number in each cell is the Cohen’s Kappa score between two reviewers. The fraction in parentheses gives the number of references on which the two reviewers agree (numerator) over the total number of references screened by both reviewers (denominator). For example, Rebecca Elmore and Arpit Tandon were not in total agreement (they agreed on three of the four references that both screened), with a Cohen’s Kappa score of 0.5 between them.
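The chance correction behind a cell such as "0.5 (3/4)" can be illustrated with a short sketch. The function name `cohens_kappa` and the individual answers are hypothetical; they are chosen only so that two reviewers agree on three of four references, and they are not the actual answers from the example project.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Return (kappa, agreements, total) for two raters' answers
    on the same references, given in the same order."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both raters must rate the same non-empty set of references")
    total = len(ratings_a)

    # Observed agreement: share of references with identical answers.
    agreements = sum(a == b for a, b in zip(ratings_a, ratings_b))
    p_o = agreements / total

    # Chance agreement: probability that both raters pick the same answer
    # if each answered independently at their own observed rates.
    counts_a = Counter(ratings_a)
    counts_b = Counter(ratings_b)
    p_e = sum(counts_a[c] * counts_b[c] for c in counts_a) / (total * total)

    if p_e == 1.0:
        return float("nan"), agreements, total  # kappa is undefined here
    return (p_o - p_e) / (1 - p_e), agreements, total


# Hypothetical answers to "Include this reference?" for four shared references.
reviewer_1 = ["yes", "yes", "no", "no"]
reviewer_2 = ["yes", "yes", "no", "yes"]
kappa, agreed, screened = cohens_kappa(reviewer_1, reviewer_2)
print(f"{kappa} ({agreed}/{screened})")  # prints 0.5 (3/4)
```

With these answers the observed agreement is 0.75 and the expected chance agreement is 0.5, so kappa is (0.75 - 0.5) / (1 - 0.5) = 0.5. Other answer patterns with three of four agreements can give a different kappa, because the chance term depends on how often each reviewer chooses each answer.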

The Interrater Reliability Score Report can be exported as an .xlsx file.